Dictators constantly face a dilemma: crush dissent, which terrifies the populace but also angers it, or tolerate protests and offer reforms, which keeps the public at bay but emboldens dissidents in the process. Instead of relying on gut instinct, experience, or historical precedent, autocrats now have advances in data analytics and ubiquitous passive data to thank for letting them develop new, scientifically validated methods of repression. By analyzing the dynamics of resistance with a depth previously impossible, autocrats can preemptively crush dissent more reliably and more precisely.

With machine learning and social network analysis, dictators can identify future troublemakers far more efficiently than through human intuition alone. Predictive technologies have already outperformed their human counterparts. In one project, Telenor Research and the MIT Media Lab used machine-learning techniques to develop an algorithm for targeted marketing and pitted it against a team of top-flight marketers from a large Asian telecom firm. The algorithm drew on a combination of its targets’ social networks and phone metadata, while the human team relied on its tried-and-true methods. Not only was the algorithm almost 13 times more successful at selecting initial purchasers of the cell phone plans, but 98 percent of its purchasers kept their plans after the first month, compared with 37 percent of the marketers’ purchasers.

Comparable algorithms that target people for other purposes have shown promise in somewhat more ominous applications. For example, advanced social network algorithms developed by the U.S. Navy are already being applied to identify key street gang members in Chicago and in municipalities in Massachusetts. Algorithms like these detect, map, and analyze the social networks of people of interest (either alleged perpetrators or victims of crimes). In Chicago, they have been used to identify the people most likely to be involved in violence, allowing police to reach out to their family and friends and leverage those social ties against violence. The data for the models can come from a variety of sources, including social media, phone records, arrest records, and anything else to which the police have access. Some programs of this kind also integrate geotags from the other data to create a geographic map of events. Such programs have proven effective in evaluating the competence of Syrian opposition groups, in identifying the networks that build and distribute improvised explosive devices in Iraq, and in helping police target gangs and select criminal suspects for investigation.

Related breakthroughs in computer algorithms have proven effective in forecasting civil unrest. Since November 2012, computer scientists have worked on Early Model Based Event Recognition using Surrogates (EMBERS), an algorithm developed with funding from the Intelligence Advanced Research Projects Activity that draws on publicly available tweets, blog posts, and other inputs to forecast protests and riots in South America. By 2014, it was forecasting events at least a week in advance with impressive accuracy. The algorithm learned steadily from its successes and failures, adjusting how it weighted variables and data with each successive attempt.

In Russia, the pro-Kremlin think tank Center for Research in Legitimacy and Political Protest claims to have developed a similar software system, called Laplace’s Demon, which monitors social media activity for signs of protest. According to the center’s head, Yevgeny Venediktov, social scientists, researchers, government officials, and law enforcement agencies that use the system “will be able to learn about the preparation of unsanctioned rallies long before the information will appear in the media.” Venediktov considers the tool a vital security measure for curbing protests, stating, “We are now facing a serious cyber threat—the mobilization of protest activists in Russia by forces located abroad,” necessitating “active and urgent measures to create a Russian system of monitoring social networks and [develop] software that would warn Russian society in advance about approaching threats.”

Authoritarian governments, of course, have access to far more data about their citizens than a telecom company, a local police department, or Laplace’s Demon could ever hope for, making it all the easier for them to ensure that their people never escape the quiet surveillance web of trouble-spotting algorithms. Classic Orwellian standbys will only become more effective through new technologies and algorithms: wiretapping, collecting communication metadata, watching public areas through cameras (with ever-improving facial recognition), monitoring online activity (especially in China, where the state directly controls network providers and surveillance tools are built directly into social media services), tracking purchase records, scanning official government records, and hacking into any computer files that cannot be accessed directly.

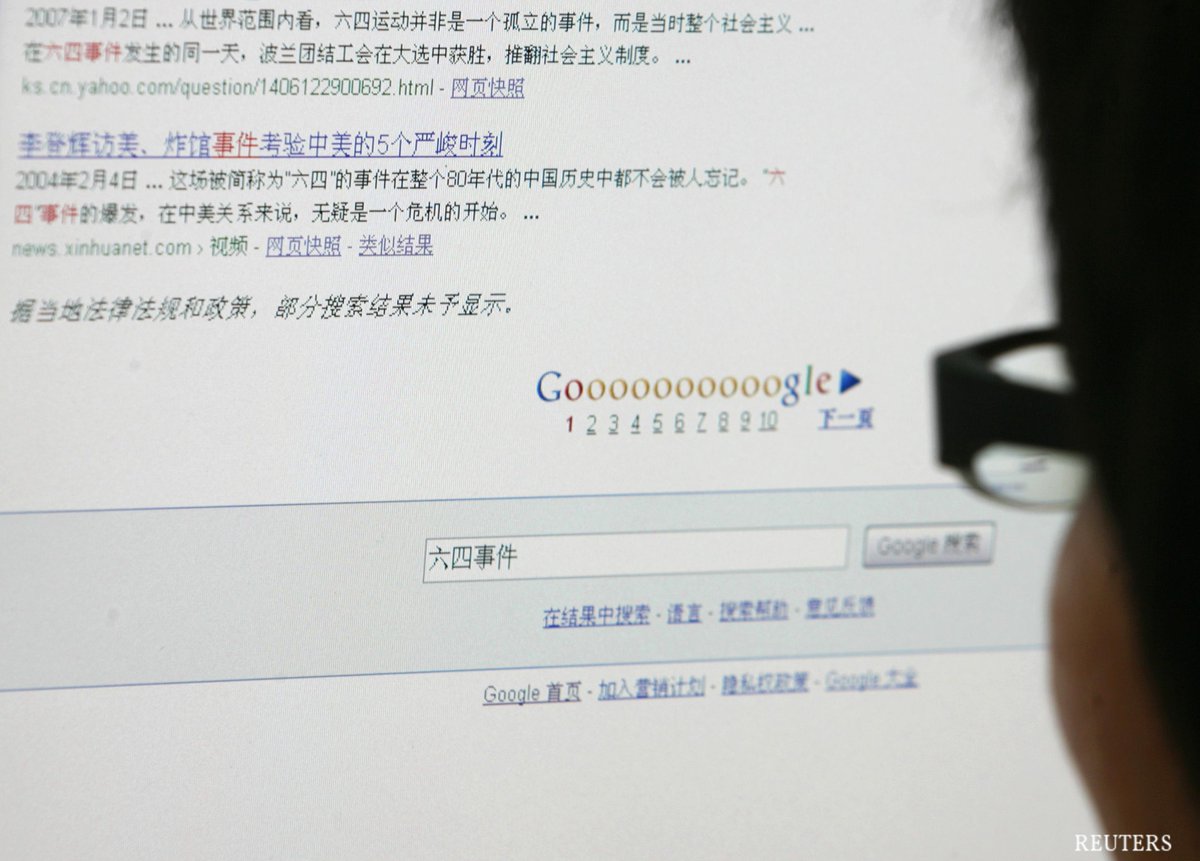

There are many new surveillance methods available at the touch of a button. For example, former Ukrainian President Viktor Yanukovych sent a passive-oppressive mass text to people near a protest, warning them that they were registered as participants in a mass riot. Governments can monitor their citizens’ locations through their phones, and tracking people through wearable computers and smart appliances is on the way. With a steady stream of data available from nearly every citizen, automated sifters such as EMBERS can steadily learn which data are valuable and prioritize them accordingly. Machines have already proved competent at inferring a variety of private traits from Facebook likes, forecasting from social media profiles whether groups will stick together, identifying personality traits from phone data and Twitter activity, determining the stage of a customer’s pregnancy from purchase behavior, and estimating how likely someone is to take a prescribed medication based on a variety of seemingly unrelated factors.

At the same time that mass surveillance is becoming less obtrusive, outright mass censorship, once a standby tool of repressive regimes worldwide, may have a more effective alternative thanks to analytics. Not only can blatant censorship provoke a backlash, it also complicates states’ ability to monitor their people by pushing them either to move to communication channels that are harder to watch or to evade notice with coded language or symbols. The Grass-Mud Horse, for example, is an entirely fictional creature popularized on the Chinese Internet because its name, when spoken, sounds like an extremely vulgar swear word that the Communist Party’s automatic censors would normally catch. Because the written text itself seems harmless, however, the censors could not easily clamp down on it. Indeed, Chinese Internet users have developed extensive systems of code phrases to evade the automated detection of certain sentiments.

Authoritarian regimes have been getting smarter at how they influence the public dialogue. Russia boasts a well-organized army of paid anonymous online commenters. These agents seek to covertly influence opinion both at home and abroad, posting on Russian- and English-language forums and social media outlets that feature news about the nation, items on Ukraine, or criticisms of Russian President Vladimir Putin. Reports also link the group to attempts to manipulate global opinion of U.S. President Barack Obama, as well as to several serious online hoaxes, including false reports in the United States of a chemical plant explosion, an Ebola virus outbreak, and the lethal police shooting of an unarmed black woman in the wake of the shooting in Ferguson, Mo.

China pioneered the use of government-paid commentators with its 50 Cent Party, the countless commentators who steer online discussion in party-approved directions. According to a New Statesman interview with an anonymous 50 Cent Party member, the goal is “to guide the netizens’ thoughts, to blur their focus, or to fan their enthusiasm for certain ideas.” This aligns with a 2007 speech to the Politburo by former Chinese President Hu Jintao, in which he called for the organization to “assert supremacy over online public opinion” and “study the art of online guidance.” Like Venediktov and others in Russia, members of the Chinese government see this guidance as necessary to protect their leadership against foreign attempts to stir up unrest. Last May, the People’s Liberation Army publicly claimed that “Western hostile forces along with a small number of Chinese ‘ideological traitors’ have maliciously attacked the Communist Party of China, and smeared our founding leaders and heroes, with the help of the Internet. . . .
Their fundamental objective is to confuse us with ‘universal values,’ disturb us with ‘constitutional democracy,’ and eventually overthrow our country through ‘color revolution.’”